Deployment options

H2O Hydrogen Torch offers three options to deploy a built model:

- H2O Hydrogen Torch UI
- Python environment
- H2O MLOps

Each option is explained below.

H2O Hydrogen Torch UI

Through the H2O Hydrogen Torch UI, you can score new data with built models (experiments) and generate downloadable predictions. To score new data through the H2O Hydrogen Torch UI:

- In the H2O Hydrogen Torch navigation menu, click Predict data.
- In the Experiment box, select the built experiment you want to use to score new data.
- In the Dataset and Environment settings section, specify the settings that are displayed.

  Note

  The problem type of the selected experiment determines which settings H2O Hydrogen Torch displays. To learn more, see Predict data (dataset and environment settings).

- Click Run predictions.

View a running or completed prediction (UI)

To view a running or completed prediction through the H2O Hydrogen Torch UI:

- In the H2O Hydrogen Torch navigation menu, click View predictions.
- In the View predictions table, select the name of the prediction you want to view.

  Note

  - To learn how to download a completed prediction, see Download Predictions.
  - To learn about the tabs available when viewing a running or completed prediction, see Prediction Tabs.

Python environment

H2O Hydrogen Torch lets you download a standalone Python Scoring Pipeline that you can use to score new data with a trained model in any external Python environment.

To download the standalone Python Scoring Pipeline of a built model:

-

In the H2O Hydrogen Torch navigation menu, click View experiments.

-

In the View experiments table, select the name of the experiment (model) you want to download its standalone Python Scoring Pipeline.

Note

-

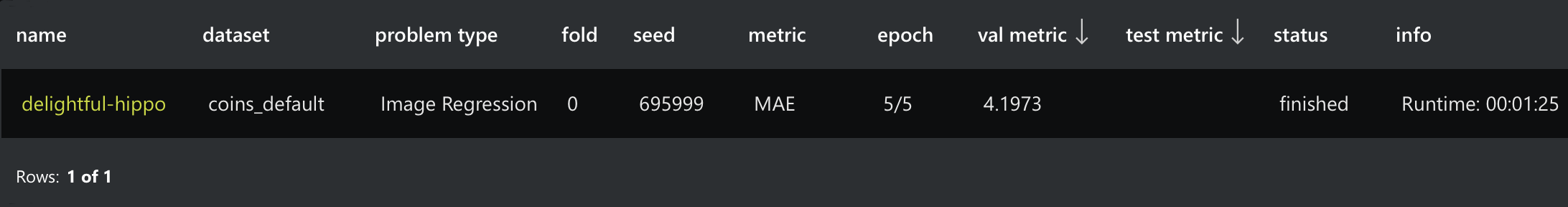

Before selecting an experiment, make sure its status is finished:

- To learn about all the different experiment statuses, see Experiment statuses.

-

A standalone Python Scoring Pipeline is only available for experiments with a finished status.

-

-

Click Download scoring.

In summary, using the Python Scoring Pipeline involves the following steps:

- Select a finished experiment.
- Download the Python Scoring Pipeline.
- Install the H2O Hydrogen Torch wheel package in a Python 3.7 environment of your choice.

  Note

  - The H2O Hydrogen Torch `.whl` package is shipped with the downloaded Python Scoring Pipeline. To install the `.whl` package, run `pip install *.whl` within the scoring pipeline folder.
  - A fresh environment is highly recommended and can be set up using `pyenv` or `conda`. For more information, see pyenv or Managing Conda environments.
  - The H2O Hydrogen Torch scoring pipeline supports Ubuntu 16.04+ with Python 3.7.
  - Ensure that `python3.7-dev` is installed on Ubuntu versions that support it. To install it, run `sudo apt-get install python3.7-dev`.
  - Ensure that `setuptools` and `pip` are up to date. To upgrade them, run `pip install --upgrade pip setuptools` within the Python environment.
  - Refer to the `README.txt` file shipped with the downloaded Python Scoring Pipeline for more details.

- Use the provided sample code to score new data with your trained model weights.

  Note

  The sample code comes with the downloaded Python Scoring Pipeline in a file named `scoring_pipeline.py`.
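The wheel installation and pip upgrade steps can also be scripted from Python. The following is a minimal sketch, not part of the shipped pipeline: the helper names `check_python_version`, `build_upgrade_command`, and `build_install_command` are hypothetical. It mirrors the documented `pip install *.whl` command by globbing the wheels in the scoring pipeline folder and invoking pip through the current interpreter, so the package is installed into the environment you actually run.

```python
import sys
from pathlib import Path

# The scoring pipeline documentation states support for Python 3.7
SUPPORTED_VERSION = (3, 7)


def check_python_version(version_info=sys.version_info):
    """Return True if version_info matches the supported major.minor version."""
    return tuple(version_info[:2]) == SUPPORTED_VERSION


def build_upgrade_command():
    """Equivalent of `pip install --upgrade pip setuptools` for the current interpreter."""
    return [sys.executable, "-m", "pip", "install", "--upgrade", "pip", "setuptools"]


def build_install_command(pipeline_dir):
    """Equivalent of running `pip install *.whl` inside pipeline_dir.

    Hypothetical helper: it calls pip through the current interpreter
    (`python -m pip`) so the wheel lands in the active environment.
    """
    wheels = sorted(str(path) for path in Path(pipeline_dir).glob("*.whl"))
    if not wheels:
        raise FileNotFoundError(f"no .whl file found in {pipeline_dir}")
    return [sys.executable, "-m", "pip", "install", *wheels]
```

For example, `subprocess.run(build_install_command("scoring_pipeline"), check=True)` would install the wheel, assuming the pipeline was extracted to a folder named `scoring_pipeline` (a hypothetical path). Calling pip as `sys.executable -m pip` rather than a bare `pip` avoids installing into a different interpreter than the one you score with.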

H2O MLOps

H2O Hydrogen Torch offers an MLOps Pipeline that you can use to deploy a trained model directly to H2O MLOps and score new data through the H2O MLOps REST API.

To download the MLOps Pipeline of a built model:

- In the H2O Hydrogen Torch navigation menu, click View experiments.
- In the View experiments table, select the name of the experiment (model) whose MLOps Pipeline you want to download.

  Note

  Before selecting an experiment, make sure its status is finished:

  - An MLOps Pipeline is only available for experiments with a finished status.
  - To learn about all the different experiment statuses, see Experiment statuses.

- Click Download MLOps.

In summary, using the H2O MLOps REST API involves the following steps:

- Select a finished experiment.
- Download the MLOps Pipeline.
- Deploy the MLflow model to H2O MLOps.

  Note

  The MLflow model (`model.mlflow.zip`) comes inside the downloaded MLOps Pipeline.

- Score new data (e.g., image data) by calling the API endpoint. For example:

  ```python
  import base64
  import json

  import cv2
  import requests

  # Fill in the endpoint URL from H2O MLOps
  URL = "endpoint_url"

  # To score an image, base64-encode it and send it as a string
  img = cv2.imread("image.jpg")
  input_value = base64.b64encode(cv2.imencode(".png", img)[1]).decode()

  # For text, you can send the string as-is instead:
  # input_value = "This is a test message!"

  # JSON data to be sent to the API
  data = {"fields": ["input"], "rows": [[input_value]]}

  # For the text span prediction problem type, pass the question and context texts:
  # input_value = ["Input question", "Input context"]
  # data = {"fields": ["question", "context"], "rows": [input_value]}

  # Send the POST request and raise an error on an unsuccessful HTTP status
  r = requests.post(url=URL, json=data)
  r.raise_for_status()

  # Parse the response body as JSON
  ret = r.json()

  # Read the output: the first score cell holds a JSON string that decodes to a dictionary
  ret = json.loads(ret["score"][0][0])
  ```

  Note

  - The above code comes inside the downloaded Python Scoring Pipeline in a file named `api_pipeline.py`.
  - The JSON response received from an H2O MLOps REST API call follows the same format as the `.pkl` files discussed in the Download Predictions page.

- Monitor requests and predictions on H2O MLOps.
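The request body and response handling from the `api_pipeline.py` sample can be factored into small helpers, which also lets you check the payload shape without a live endpoint. This is a sketch under assumptions: the helper names are hypothetical, and sending several rows in one request assumes the deployed model accepts multiple rows and returns one score row per input row, whereas the shipped sample only sends a single row. `encode_image_bytes` expects bytes that are already an encoded image file (the sample produces PNG bytes with `cv2.imencode`).

```python
import base64
import json


def encode_image_bytes(image_bytes):
    """Base64-encode already-encoded image bytes (e.g. PNG) into a JSON-safe string."""
    return base64.b64encode(image_bytes).decode("ascii")


def build_payload(inputs):
    """Build a request body with one row per single-input value (image string or text)."""
    return {"fields": ["input"], "rows": [[value] for value in inputs]}


def build_span_payload(pairs):
    """Build a request body for text span prediction from (question, context) pairs."""
    return {"fields": ["question", "context"], "rows": [[q, c] for q, c in pairs]}


def parse_scores(response_json):
    """Decode the JSON string in the first column of each returned score row.

    For a single-row request, parse_scores(r.json())[0] matches the sample's
    json.loads(ret["score"][0][0]).
    """
    return [json.loads(row[0]) for row in response_json["score"]]
```

With a real endpoint, you would pass `build_payload([...])` as the `json=` argument of `requests.post` and hand `r.json()` to `parse_scores`.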
